Yes, I know, the title of this blog post doesn’t sound very good. But if you read on, you’ll see that it fits very well. Recently, just a few days before I wrote this post, one of my customers called me because of a vCenter issue. He has two HPE ProLiant DL380 Gen9 servers and an MSA 2040 SAS Dual Controller storage system.

The customer told me that some VMs weren’t running anymore and that both hosts were unavailable in vCenter. I took a quick look through remote support and confirmed it: both hosts were gone, and most of the VMs were still running, but some were not. We first tried to access the hosts through SSH, without success. We then tried the DCUI, with moderate success: we were able to log on, but the DCUI stopped responding after the successful login. That’s kind of strange; I hadn’t seen that before. The hosts did respond to ping, so there was at least a little light at the end of the tunnel.
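A host that answers ping but refuses SSH and vCenter is easier to describe when you know exactly which management ports still respond. Here is a minimal sketch of such a check. The port numbers (22 for SSH, 443 for HTTPS, 902 for the vCenter agent) are the usual ESXi defaults and are my assumption here, as are the function names; this is not what we ran during the incident.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


def probe_management_ports(host: str, ports=None, timeout: float = 2.0) -> dict:
    """Probe a set of named TCP ports and return {name: reachable}."""
    # Assumed defaults: SSH, HTTPS (host client / API), vCenter agent.
    ports = ports or {"ssh": 22, "https": 443, "vcenter_agent": 902}
    return {name: port_is_open(host, port, timeout) for name, port in ports.items()}


# Example call (hypothetical host name):
# probe_management_ports("esxi01.example.local")
```

A result where every port reports `False` while ping still works would match what we saw: the network stack is alive, but the management services are not.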

We then decided to restart one host. We had no SSH (PuTTY) access to the hosts, we couldn’t manage them from vCenter, and we couldn’t use the DCUI. What else could happen?

So we restarted a host. And this is the beginning of this story…

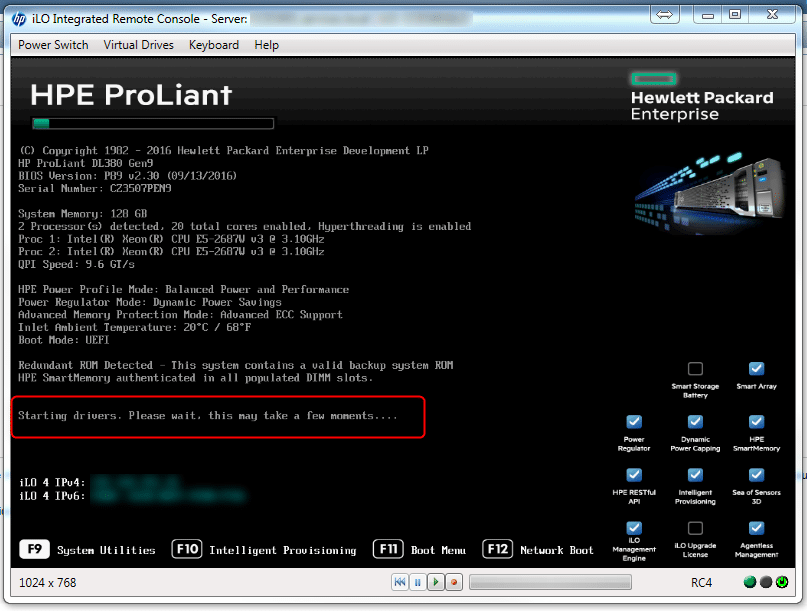

The screen above was all we could see when we restarted the server. The pre-POST boot was successful, then all the server sensors were initialized. We saw the notification “Starting drivers. Please wait, this may take a few moments…”. That was all. We waited for “some moments” but nothing happened. The customer powered the server off and on again, with the same result.

Could it be a firmware / BIOS issue?

A quick Google search turned up a promising result. My friend Andreas Lesslhumer from running-system.com had run into this issue recently, so he wrote a blog post to help the rest of us should we encounter it too. His post was all about System ROM and iLO firmware versions and their compatibility. It looks like the recent iLO firmware 2.50 (which is included in the HPE Service Pack for ProLiant April 2017) has some issues.

First we tried to downgrade the iLO firmware to version 2.40, as mentioned in his blog post. Unfortunately, in our case it didn’t help. We went a little lower, to version 2.30, but that didn’t help either. We also downgraded the System ROM to version 2.30, and that didn’t help either.

I then contacted Andreas to ask him if I had missed something. I hadn’t missed anything or done anything wrong. He told me that he had just had this issue on the same day I did, and that a downgrade of the iLO firmware didn’t help in his case. So the problem is not (only) related to iLO firmware and/or compatibility.

Anyway, it’s a great post from Andreas, and in certain situations, depending on your infrastructure, downgrading the iLO firmware can help!

What else could it be if not firmware or BIOS?

It goes without saying that I opened a support case with HPE. Even if you’re familiar with HPE servers and storage and work with them often, I recommend opening a support case. HPE will check logs for you, check spare parts and logistics, and so on. I’ve been working in IT for several years now and have seen many things, but I knew neither this problem nor the reason for it. So help from the manufacturer was needed.

Some time went by with upgrades and downgrades of the iLO and System ROM firmware, followed by reboots to test whether the servers would now boot. They didn’t. We also checked the SD cards that are installed in the servers as the ESXi boot drive. We removed them, but the servers still didn’t boot. It was more or less by coincidence that we thought, “hey, let’s just unplug the storage and see if the server boots then”.

So we unplugged the SAS cables from the servers and voilà, both servers booted without any problems.

OK, it’s an HPE storage related issue…

Now we’re talking! At least we knew the boot problems were caused by the HPE MSA storage. Time to double-check. We started basic troubleshooting. After shutting down both servers, we plugged in only the SAS cable from controller 1 to ESXi server 1. The server booted without problems. We repeated this step with controller 2 instead. The server didn’t boot. OK then, one of the controllers has an issue.

We restarted both storage controllers and repeated the steps above: first a boot with controller 1 connected, then a second boot with controller 2 connected. And again the same picture: with controller 2 connected, the server wouldn’t boot.

We then unplugged the storage completely and shut it down entirely. We also removed the power cables and left it without power for a moment. After we booted the storage up again, we checked whether the server would boot with each controller. And it did! Shutting down the storage and removing power from it helped!

Both servers booted with each storage controller connected, and also when both controllers were connected!

What we figured out so far…

Let’s have a quick look at what we had figured out so far:

- It’s not an incompatibility between iLO firmware and System ROM

- The SD cards (ESXi boot media) were fine

- The servers boot without the storage connected

- Both servers boot with storage controller 1 connected

- The servers don’t boot with storage controller 2 connected

- A minimal configuration test wasn’t necessary because the servers boot without storage

- Restarting both storage controllers worked but didn’t help

- A complete shutdown of the storage, including removing power, helped!

Now we knew what caused the servers to not boot properly or to freeze at the POST screen, and we were able to fix it.
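The isolation steps we went through boil down to a simple rule: anything that was attached during a successful boot is cleared, and whatever was attached only during failed boots stays a suspect. Here is a small sketch of that reasoning. The function name and the component labels are made up for illustration, and the observations are the ones from before the full power cycle of the storage.

```python
from typing import Dict, FrozenSet, Set


def suspect_components(results: Dict[FrozenSet[str], bool]) -> Set[str]:
    """Return components seen in failed boots but never in a successful one.

    `results` maps the set of attached components to whether the server booted.
    """
    passed: Set[str] = set()
    failed: Set[str] = set()
    for attached, booted in results.items():
        (passed if booted else failed).update(attached)
    return failed - passed


# Observations from our tests (labels are illustrative):
observations = {
    frozenset(): True,                     # storage unplugged: server boots
    frozenset({"controller_1"}): True,     # only controller 1 cabled: boots
    frozenset({"controller_2"}): False,    # only controller 2 cabled: hangs
}

print(suspect_components(observations))  # {'controller_2'}
```

This only works if you change one thing per test, which is exactly why we cabled one controller at a time.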

Final steps to solve the issue

Finally, we did a lot of firmware upgrades to make sure we were up to date in terms of compatibility, features and bug fixes. At the time this blog post was published, we had these firmware versions installed on the servers and storage:

- System ROM: v2.40

- iLO: v2.54

- H241 SAS HBA: v5.04

- MSA 2040 SAS Dual Controller: GL220P009

System ROM, iLO and HBA firmware were part of the above-mentioned HPE Service Pack for ProLiant (SPP), except for the latest iLO version, which we downloaded directly from HPE and installed through iLO itself. Updating the storage firmware took a long time, probably 20 to 30 minutes per controller, but to be honest I didn’t measure it. It just took long.
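If you want to keep track of such a known-good state, the versions listed above could be written down as a small baseline and compared against what is actually installed. This is only a sketch: the `installed` values have to be collected by hand or by a tool of your choice, and the function name is my own invention.

```python
# Known-good firmware versions from this post.
BASELINE = {
    "System ROM": "v2.40",
    "iLO": "v2.54",
    "H241 SAS HBA": "v5.04",
    "MSA 2040 SAS Dual Controller": "GL220P009",
}


def firmware_drift(installed: dict, baseline: dict = BASELINE) -> dict:
    """Return {component: (installed, expected)} for each mismatch or missing entry."""
    return {
        name: (installed.get(name), expected)
        for name, expected in baseline.items()
        if installed.get(name) != expected
    }


# Example: a host that still runs the iLO version from the April 2017 SPP.
installed = dict(BASELINE, iLO="v2.50")
print(firmware_drift(installed))  # {'iLO': ('v2.50', 'v2.54')}
```

The comparison is a plain string match on purpose, because the MSA firmware name is not a simple version number.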

We did some final tests after all the firmware upgrades and were finally able to boot the servers properly and get the domain controller and vCenter back up and running. After that we successfully booted all other VMs without any issues.

Conclusion

It took some hours to get this infrastructure back up and running, but I gained some good experience. It can happen that there is a software / firmware incompatibility, but it doesn’t have to be that. The causes can be very different. I would not have been surprised if an external USB drive or some USB dongle thingy had caused the issue. But in our case it was the MSA storage that prevented the servers from booting whenever it was connected to them.

We were glad we figured that out and the customer is happy that the infrastructure is back online and all his services are running fine.

Thanks for your time to write this up.

It helped me to get one step further with the fault finding.

It is strange that the port 2 does this.

HP will be contacted.

Hi,

When removing the SAS drives do I need to disconnect the network before the start-up?

Thanks in advance,

Paul

Hi Paul,

I don’t see a need to remove the network before booting the server. As mentioned in my blog post, unplugging the SAS storage helped to boot the server. And with unplugging the SAS storage, I was able to find the root cause (the storage and its firmware).

Best regards,

Karl